# PairLIE 论文详解

论文为 2023CVPR 的 Learning a Simple Low-light Image Enhancer from Paired Low-light Instances. 论文链接如下:

openaccess.thecvf.com/content/CVPR2023/papers/Fu_Learning_a_Simple_Low-Light_Image_Enhancer_From_Paired_Low-Light_Instances_CVPR_2023_paper.pdf

# 出发点

1.However, collecting high-quality reference maps in real-world scenarios is time-consuming and expensive.

出发点 1:在低光照领域,从现实世界中获取高质量的参考照片进行监督学习,既费时又困难,成本昂贵。

因为获得低光环境的照片是容易的,而此低光照片对应的亮度较大的参考图片是难得的。

2.To tackle the issues of limited information in a single low-light image and the poor adaptability of handcrafted priors, we propose to leverage paired low-light instances to train the LIE network.

Additionally, twice-exposure images provide useful information for solving the LIE task. As a result, our solution can reduce the demand for handcrafted priors and improve the adaptability of the network.

出发点 2:为了解决手动设置的先验的低适应性,减少手动设置先验的需求,同时提升模型对陌生环境的适应性。

# 创新点

The core insight of our approach is to sufficiently exploit priors from paired low-light images.

Those low-light image pairs share the same scene content but different illumination. Mathematically, Retinex decomposition with low-light image pairs can be expressed as:

创新点 1:作者利用两张低光图片进行训练,以充分提取低光图片的信息。

instead of directly imposing the Retinex decomposition on original low-light images, we adopt a simple self-supervised mechanism to remove inappropriate features and implement the Retinex decomposition on the optimized image.

创新点 2:作者基于 Retinex 理论,但是并不循旧地直接运用 Retinex 的分解。作者采用一个简单的自监督机制以实现不合理特征的去除(通常是一些噪音)以及更好地实现 Retinex 理论。

# 模型

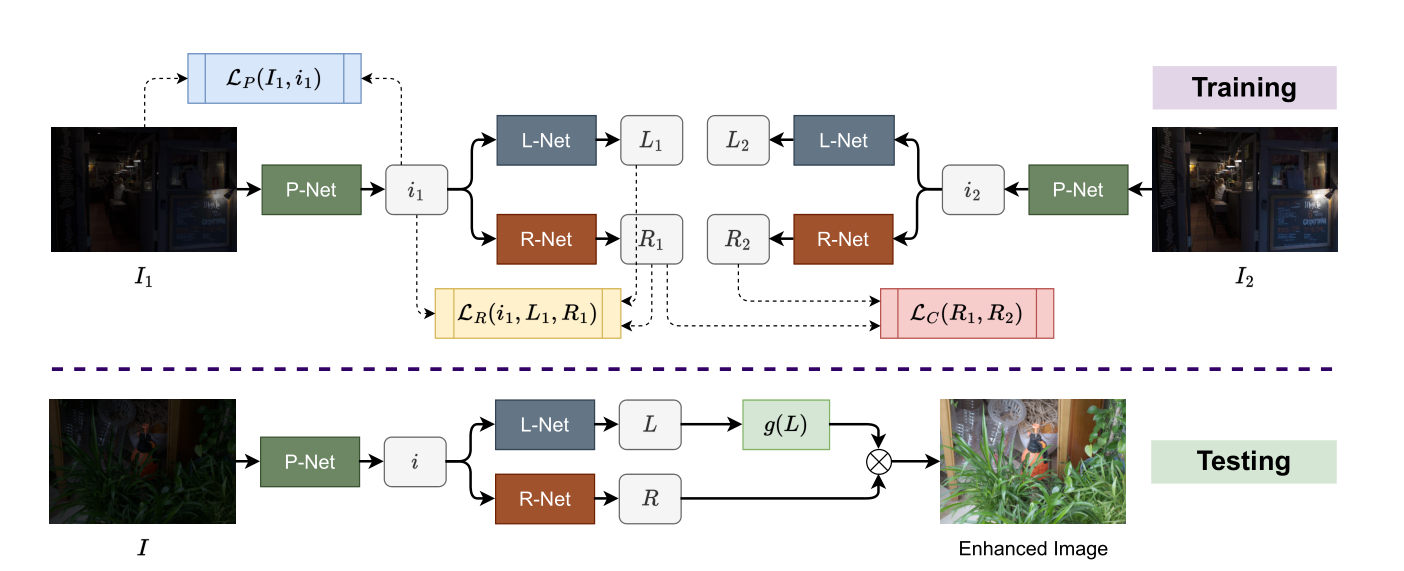

将两张同一场景不同曝光的低光图片送入训练中,图片 I1 与 I2 先经过 P-Net 去除噪音,得到 i1 与 i2,然后利用 L-Net 与 R-Net 分解为照度 L1 与反射 R1(对应有 L2 与 R2)。

在测试,只需要输入一张低光照图片 I,经过 P-Net 的噪音去除,得到 i,然后用 L-Net 与 R-Net 分解为照度和反射,然后对照度 L 进行增强,操作为 g (L),把增强结果与反射 R 进行元素乘法,得到增强后的图片 Enhanced Image。

# 设计及其损失

Note that, this paper does not focus on designing modernistic network structures. L-Net and R-Net are very similar and simple,

1. 模型使用的 L-Net 与 R-Net 十分简单。整体架构只是单纯的卷积神经网络。

Apart from L-Net and R-Net, we introduce P-Net to remove inappropriate features from the original image. Specifically, the structure of the P-Net is identical to the R-Net.

2,P-Net 被设计用于去除不合理特征。

Note that the projection loss needs to cooperate with the other constraints to avoid a trivial solution.i,e.,i1 = I1.

3.Projection Loss:最大程度限制去除不合理特征后的 i1 和原始低光图片 I1 的区别。

这个损失需要避免一个特例,即降噪后图片与原图相同,即未降噪。

Since sensor noise hidden in dark regions will be amplified when the contrast is improved.

In our method, the sensor noise can be implicitly removed by Eq. 1.

4.Reflection Loss:通常用传感或摄影设备拍摄低光场景照片会携带一定的设备噪音,这个损失最大限度保证两张图片的反射是相同的,减少传感或摄影设备的影响,这是因为图片场景的内容相同。

这个损失是确保反射的一致性。

is applied to ensure a reasonable decomposition.

is to guide the decomposition.

Specifically, the initialized illumination L0 is calculated via the maximum of the R, G, and B channels:

5.Retinex Loss:Retinex 损失是为了限制分解组块 L-Net 和 R-Net 以满足 Retinex 的理论要求。

本文毕